Troll Accounts / Troll Groups

Infodemic differentiates “Troll Accounts” from “Normal Accounts” by their behavior rather than by the content they promote. In Infodemic, we detect accounts that act together as if they were following top-down commands. The signals include, but are not limited to:

- Active time is synchronized, as observed from the timeline of each account's activities on the social platform, such as posting, commenting, and sharing.

- Active place is synchronized: the accounts are seen in the same places and are not seen elsewhere. “Seen in the same places” means they comment on, share, or like the same posts or sources.

- Inactive accounts suddenly become active again at the same time.

These top-down, synchronized actions can be observed recurrently over long periods of time, from months to years. We call this “Collaborative Behavior”. Accounts that show collaborative behavior are “Troll Accounts”, and troll accounts acting together form “Troll Groups”. Furthermore, when we examined the synchronized accounts, we discovered more suspicious characteristics, including but not limited to:

- The account's profile picture was stolen from the internet, and many accounts use the same stolen profile picture.

- Different accounts within a troll group change their profile pictures at the same time.

- Some accounts have Chinese names and reply in Chinese like a local, but their account timeline contains only foreign content (usually from Southeast Asian countries).

These suspicious characteristics imply that the accounts were transferred from fake-account creators, and that there is a sophisticated division of labor behind them.

In Infodemic, we detect accounts that act in sync. We calculate the degree of synchronization between every pair of accounts, then build a graph in which nodes represent accounts and edges represent the degree of synchronization. We run community detection algorithms on the graph to find “Troll Groups”. Accounts in these groups are “Troll Accounts”; all others are “Normal Accounts”.
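The grouping step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Infodemic's actual implementation: it assumes the degree of synchronization is the Jaccard overlap of each account's set of (post, hour) activities, uses a hypothetical threshold of 0.5 to decide which edges to keep, and substitutes simple connected components for a real community detection algorithm. The names `sync_degree` and `find_groups` and the sample data are invented for the example.

```python
from itertools import combinations

def sync_degree(a: set, b: set) -> float:
    """Jaccard overlap of two activity sets (an assumed synchronization measure)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def find_groups(activities: dict, threshold: float = 0.5) -> list:
    """Link account pairs whose synchronization meets the threshold, then
    return the connected components that contain at least two accounts."""
    neighbors = {account: set() for account in activities}
    for u, v in combinations(activities, 2):
        if sync_degree(activities[u], activities[v]) >= threshold:
            neighbors[u].add(v)
            neighbors[v].add(u)

    seen, groups = set(), []
    for start in activities:
        if start in seen:
            continue
        # Depth-first walk over the synchronization graph.
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(neighbors[node] - component)
        seen |= component
        if len(component) > 1:
            groups.append(component)
    return groups

# Each activity is (post_id, hour_bucket): the same place at the same time.
activities = {
    "acct_a": {("p1", 10), ("p2", 11), ("p3", 12)},
    "acct_b": {("p1", 10), ("p2", 11), ("p3", 12)},
    "acct_c": {("p1", 10), ("p2", 11), ("p4", 15)},
    "acct_d": {("p9", 3)},
}
groups = find_groups(activities)
```

In this toy data, `acct_a`, `acct_b`, and `acct_c` end up in one group, while `acct_d` shares no activity with anyone and stays a normal account. A production system would more likely keep the edge weights and use a weighted community detection method instead of a hard threshold.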

Statistical evidence shows how rare the recurrently observed high degree of synchronization between a pair of identified troll accounts is compared with random pairs. In addition, data scientists labeled the results with evidence to evaluate the accuracy of the algorithm.
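One simple way to express this kind of rarity is an empirical tail fraction: the share of random account pairs whose synchronization is at least as high as that of the pair in question. The sketch below assumes this approach for illustration only; the source does not specify which statistical test is used, and the function name `empirical_tail` and all scores are made up.

```python
def empirical_tail(observed: float, random_scores: list) -> float:
    """Fraction of random-pair scores that are at least as high as the observed score."""
    if not random_scores:
        raise ValueError("need at least one random-pair score")
    hits = sum(1 for score in random_scores if score >= observed)
    return hits / len(random_scores)

# Made-up synchronization scores for ten randomly sampled account pairs.
random_scores = [0.0, 0.05, 0.1, 0.0, 0.2, 0.02, 0.0, 0.6, 0.01, 0.03]

# Made-up score for one identified troll pair.
tail = empirical_tail(0.5, random_scores)
```

A small tail fraction means few random pairs are as synchronized as the pair being examined. In practice the random sample would need to be far larger than ten pairs for the fraction to be meaningful.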

It is possible to observe some degree of synchronization between normal accounts that share common interests and support the same people or opinions. However, the degree of synchronization is not as high as between troll accounts, because normal accounts also have differing interests and support different people or opinions. Furthermore, the high degree of synchronization between troll accounts persists for a long period of time, which is rare between normal accounts.

In Infodemic, we collect the activities of troll accounts over a long period of time and sort out their target entities and narratives, as well as the stories they participated in. We can use this information to infer their objectives, and from those objectives infer the people or organizations behind them. This preliminary evidence can then be used, with the backing of public authority, to request that the social platform provide deeper evidence, such as IP addresses, in order to identify who is behind the accounts.

In Infodemic, we collect the activities of troll accounts over a long period of time, as well as the activities of foreign organizations. We then calculate the degree of synchronization between troll accounts and foreign organizations as a measure of their relationship. Foreign organizations include:

- Official Communication Channels: Channels officially used by a state and its representatives to deliver content. For example, official websites of a state or social media accounts of diplomatic services and embassies.

- State-Controlled Channels: Media channels with an official affiliation to a state actor. They are majority-owned by a state or ruling party, managed by government-appointed bodies, and follow an editorial line imposed by state authorities.

- State-Linked Channels: Channels with no transparent links or official affiliation to a state actor, but whose attribution has been confirmed by organizations with access to privileged backend data sources, such as digital platforms, intelligence and cyber security entities, or by governments or military services based on classified information.

Entities / Sentiment / Narratives

In Infodemic, we collect all the content published by troll accounts, detect the entities mentioned, and classify the sentiment toward each mentioned entity as positive, neutral, or negative. The content is also semantically clustered into groups. Next, we organize the content by the conditions listed above and use a Large Language Model (LLM) to write summaries. These summaries are the narratives promoted by troll accounts.

Events / Stories

In Infodemic, news articles and social media posts are clustered together into events, and series of events are then linked into stories. The clustering criteria are learned from people with a journalism background.

News volume is the number of news articles that are clustered together in the event / story.

Community volume is the total number of activities on social platforms that are clustered together in the event / story. Currently only comments are counted; posting, sharing, liking, and other interactions (which differ by social platform) will be included later.

Troll volume is similar to community volume(3-3), but counts only activities initiated by troll accounts.

In the table of events / stories, the cumulative proportion of volume for a row is the total volume accumulated from the first row down to that row, divided by the total volume of the whole table.
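The calculation can be sketched as below. This is an illustrative example with made-up volumes; it assumes the rows are already in the order shown in the table (for instance, sorted by volume in descending order), and the function name `cumulative_proportions` is hypothetical.

```python
from itertools import accumulate

def cumulative_proportions(volumes: list) -> list:
    """For each row, the running total of volume divided by the table total."""
    total = sum(volumes)
    if total == 0:
        # An empty or all-zero table has no meaningful proportion.
        return [0.0 for _ in volumes]
    return [running / total for running in accumulate(volumes)]

# Made-up troll volumes for four stories, in table order.
troll_volumes = [50, 30, 15, 5]
proportions = cumulative_proportions(troll_volumes)
# proportions is [0.5, 0.8, 0.95, 1.0]
```

Read this way, the first two stories in the example account for 80% of the total volume in the table, which is the kind of cutoff that helps decide how many rows deserve attention.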

In Infodemic, you can see which stories have the most troll volume(3-4), along with their cumulative proportion(3-5). Use these criteria to select important stories.

In Infodemic, we collect the activities of troll accounts over a long period of time, as well as the activities of foreign organizations. We also organize that information into events and stories. A national flag in the interface indicates that the event / story involves foreign information manipulation and interference. For the definition of foreign organizations, please refer here(1-6).

Impact of Information Manipulation

In Infodemic, we collect the content published by troll accounts, as well as its source channels and the channels it spreads to. We can identify the target audience by analyzing the subscribers of these channels, and assess the impact on public opinion by analyzing their responses. We will release more sophisticated analysis interfaces to show the results in a future version.

Data Sources

The data sources are the major news media and major social media platforms in each country or area.

Infodemic covers events and stories in English and Chinese, mostly in the USA, Taiwan, and China. Expansion to other languages or countries is available by request.

In Infodemic, the capacity of the social data crawlers is limited by our computing resources and by the rate limits of the social platforms. Under this capacity limit, our crawling policy is to crawl all posts of impactful social accounts, as well as the corresponding interactions under those posts. The impact factor of a social account is determined by its follower count and the number of interactions on its published posts. The initial list comes from the ranking list of each social platform, and we then expand it by discovering accounts that interacted with accounts already in the list.

Interface

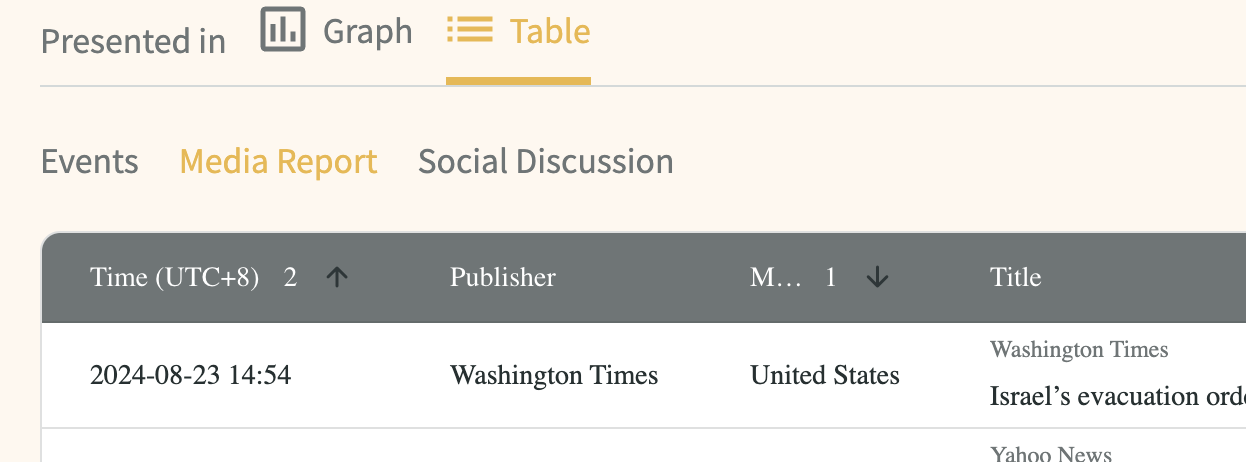

Click a table header to sort by that column. The direction of the arrow shows whether the column is sorted in ascending or descending order. Press and hold the ‘Shift’ key and click another column to sort by multiple columns at the same time.
